Text-Guided Image Inpainting

Introduction

This blog post describes a machine learning tool to edit images through text-based prompts. The user specifies the object(s) to be removed (e.g., “chair”) and optionally what it should be replaced with (e.g., “stool”). This inpainting tool is completely text-guided, so the user does not need to draw or provide a mask image of the areas to be edited. Instead, the mask image is automatically generated from the arbitrary text provided in the prompt.

Inpainting traditionally refers to restoration of damaged or deteriorated artwork. Here, I am using the term to refer to image editing, specifically the replacement of unwanted elements with desired elements.

To create this tool, I utilized two models: image segmentation model + diffusion model. I’ll describe these models in more depth in the Models section. Note that there was no actual training or fine-tuning done by me — I was simply running these models in inference mode.

Code

To run this inpainting tool, please check out my code on GitHub.

The best way to get started is by opening up quickstart.ipynb (from my GitHub repo) in Google Colab and starting a session with GPU. All of the supporting functions can be found in masking_inpainting.py.

Models

This inpainting tool utilizes an image segmentation model, which is text-prompted and selects the object for removal in the form of a mask image. The mask image and a prompt of the desired replacement are then fed into a diffusion model to create the final output image.

I used an image segmentation model called CLIPSeg (GitHub | Paper). This model can perform image segmentation of arbitrary text prompts at test time. It uses OpenAI’s pre-trained CLIP model (GitHub | Paper) to convert a text prompt and user-inputted image into CLIP embeddings, which then get fed into a decoder. Here is a great diagram explaining how the model works.

Then I used a latent text-to-image diffusion model: Stable-Diffusion-Inpainting (HuggingFace Model Card), which was initialized with Stable-Diffusion-v-1-2 weights and given inpainting training. If you aren’t familiar with diffusion models or using HuggingFace’s Diffusers library, this is a great blog post to learn more. Using the diffusion model, the inpainting tool takes the user-inputted image, mask image (from CLIPSeg), and inpainting prompt and outputs the final image.

Examples

Removing An Object (Squirrel)

In this example, we’re removing an object only (no specified replacement). The input prompt is “squirrel” which indicates the object for removal.

input_filepath = 'images/squirrel.jpg'

mask_prompt = 'squirrel'

inpaint_prompt = ''

Here’s a side-by-side image of the user-provided photo (on the left) and the output photo (on the right):

The squirrel has been removed from the photo, and the background has been filled in to match the rest of the photo.

Changing Color (Roses)

Now, let’s try changing the color of an object. The input prompt is “white roses” for the object to be removed, and “red roses” for its replacement.

input_filepath = 'images/roses.jpg'

mask_prompt = 'white roses'

inpaint_prompt = 'red roses'

Side-by-side image of the user-provided photo and the output photo:

All of the roses are red now, and the background is filled in nicely to match the brown window coverings.

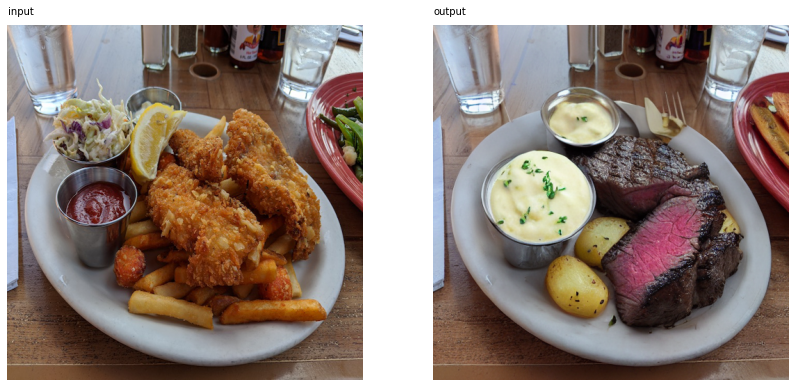

Replacing Objects (Food)

This example replaces an object with a similar one. In this case, we’re replacing all of the food on a plate (prompt: “food”) with some other kind of food (prompt: “steak and potatoes”).

input_filepath = 'images/food.jpg'

mask_prompt = 'food'

inpaint_prompt = 'steak and potatoes'

Side-by-side image of the user-provided photo and the output photo:

This is an interesting example, as the algorithm has found different kinds of potato to appropriately place in different areas of the photo. There are potato chunks on the white plate, mashed potato in the metal saucers, and potato skins on the red plate.

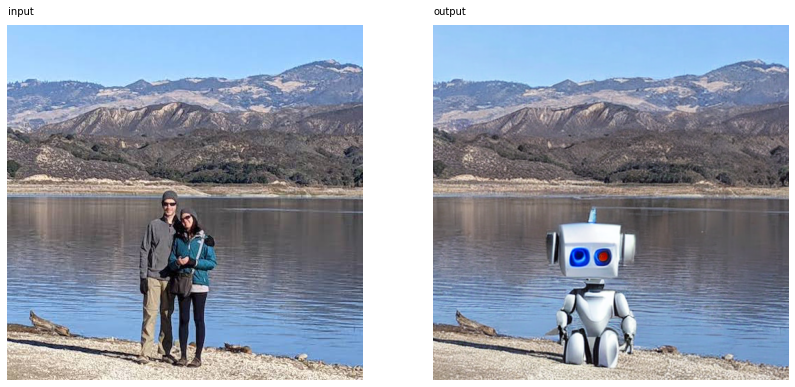

Replacing Objects (People)

In our last example, we’re replacing people with robots. The user-inputted prompts are “people” to select the man and woman in the photo, and “robot” to specify the replacement.

input_filepath = 'images/lake.jpg'

mask_prompt = 'people'

inpaint_prompt = 'robot'

Side-by-side image of the user-provided photo and the output photo:

Even the existing shadow (which was untouched by the algorithm) looks plausible as the robot’s shadow.